Simple voltage pulse can restore capacity to Li-Si batteries

If you're using a large battery for a specialized purpose—say grid-scale storage or an electric vehicle—then it's possible to tweak the battery chemistry, provide a little bit of excess capacity, and carefully manage its charging and discharging so that it enjoys a long life span. But for consumer electronics, the batteries are smaller, the need for light weight dictates the chemistry, and the demand for quick charging can be higher. So most batteries in our gadgets start to see serious degradation after just a couple of years of use.

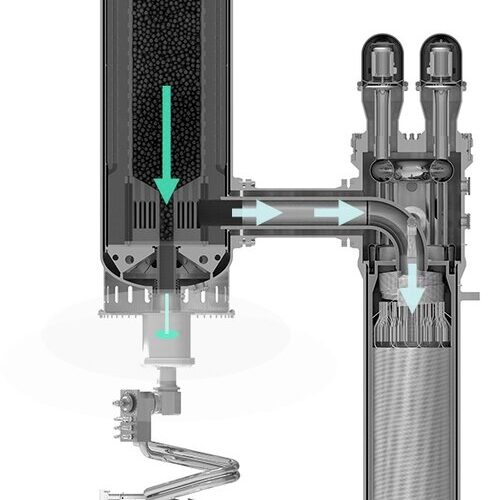

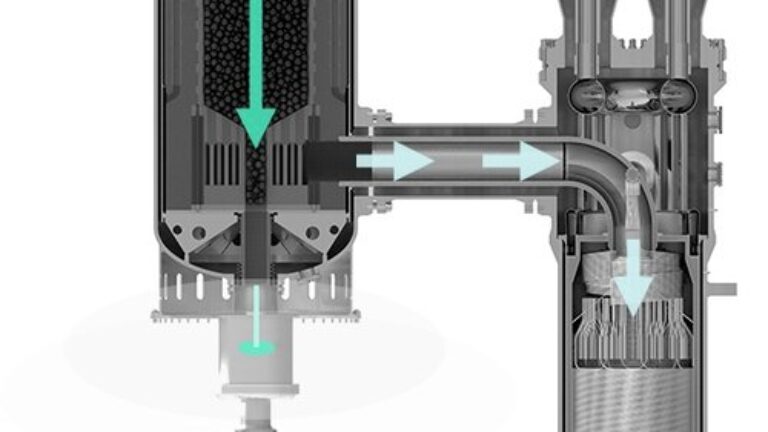

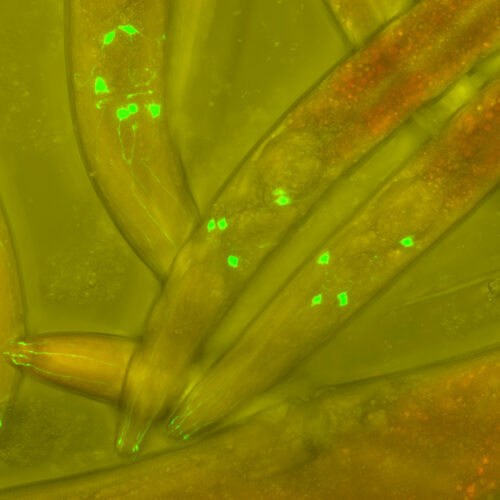

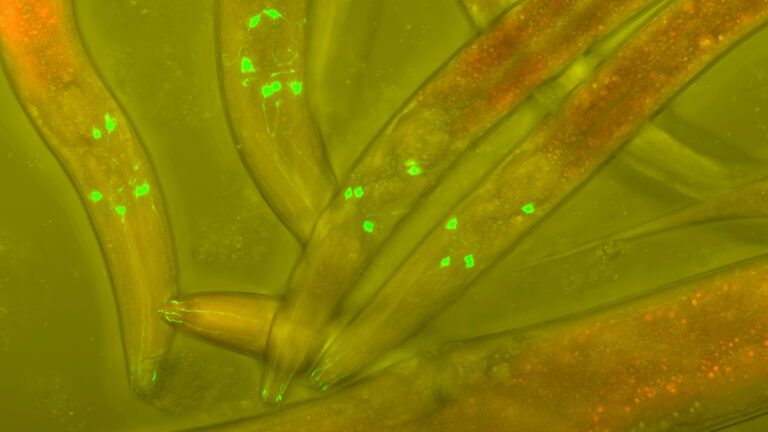

A big contributor to that degradation is the internal fragmentation of the electrode materials. This leaves some of the electrode material disconnected from the battery's charge handling system, essentially stranding the material inside the battery and trapping some of the lithium uselessly. Now, researchers have found that, for at least one battery chemistry, it's possible to partially reverse this decay, boosting the remaining capacity of the battery by up to 30 percent.

The only problem is that not many batteries use the specific chemistry tested here. But it does show how understanding what's going on inside batteries can provide us with ways to extend their life span.