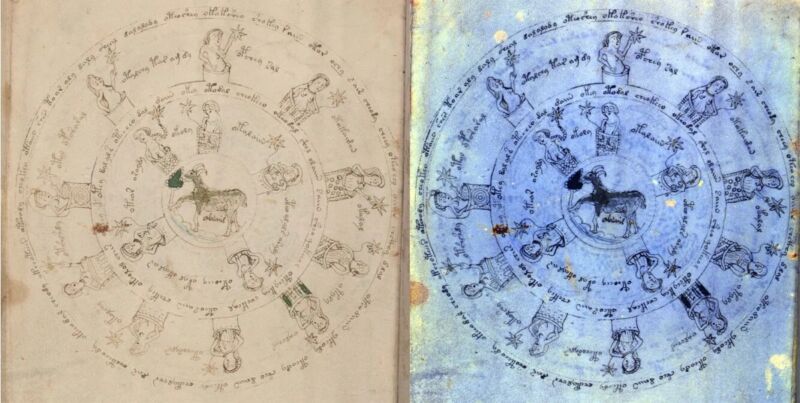

New multispectral analysis of Voynich manuscript reveals hidden details

Medieval scholar Lisa Fagin Davis examined multispectral images of 10 pages from the Voynich manuscript. (credit: Lisa Fagin Davis)

About 10 years ago, several folios of the mysterious Voynich manuscript were scanned using multispectral imaging. Lisa Fagin Davis, executive director of the Medieval Academy of America, has analyzed those scans and just posted the results, along with a downloadable set of images, to her blog, Manuscript Road Trip. Among the chief findings: three columns of lettering that were added to the opening folio could be an early attempt to decode the script. And while questions have long swirled about whether the manuscript is authentic or a clever forgery, Fagin Davis concluded that it is most likely a genuine medieval document rather than a forgery.

As we've previously reported, the Voynich manuscript is a handwritten 15th-century text whose vellum has been dated to between 1404 and 1438. It was purchased in 1912 by Wilfrid Voynich, a Polish book dealer and antiquarian (hence its moniker). Along with its strange handwriting in an unknown language or code, the book is heavily illustrated with bizarre pictures of alien plants, naked women, strange objects, and zodiac symbols. It's currently kept at Yale University's Beinecke Rare Book and Manuscript Library. Proposed authors have included Roger Bacon, the Elizabethan astrologer/alchemist John Dee, and even Voynich himself, possibly as a hoax.

There are so many competing theories about what the Voynich manuscript is—most likely a compendium of herbal remedies and astrological readings, based on the bits reliably decoded thus far—and so many claims to have deciphered the text, that it's practically its own subfield of medieval studies. Both professional and amateur cryptographers (including codebreakers in both World Wars) have pored over the text, hoping to crack the puzzle.