In July, Elon Musk made a bold prediction: that his artificial intelligence startup xAI would release “the most powerful AI in the world,” a model called Grok 3, by this December. The bulk of that AI’s training, Musk said, would happen at a “massive new training center” in Memphis, which he bragged had been built in 19 days.

But many residents of Memphis were taken by surprise, including city council members who said they were given no input about the project or its potential impacts on the city. Data centers like this one use a vast amount of electricity and water. And in the months since, an outcry has grown among community members and environmental groups, who warn of the plant’s potential negative impact on air quality, water access, and grid stability, especially for nearby neighborhoods that have suffered from industrial pollution for decades. These activists also contend that the company is illegally operating gas turbines.

“This continues a legacy of billion-dollar conglomerates who think that they can do whatever they want to do, and the community is just not to be considered,” KeShaun Pearson, executive director of the nonprofit Memphis Community Against Pollution, tells TIME. “They treat southwest Memphis as just a corporate watering hole where they can get water at cheaper price and a place to dump all their residue without any real oversight or governance.”

Some local leaders and utility companies, conversely, contend that xAI will be a boon for local infrastructure, employment, and grid modernization. Given the massive scale of this project, xAI’s foray into Memphis will serve as a litmus test of whether the AI-fueled data center boom might actually improve American infrastructure—or harm the disadvantaged just like so many power-hungry industries of decades past.

“The largest data center on the planet”

In order for AI models to become smarter and more capable, they must be trained on vast amounts of data. Much of this training now happens in massive data centers around the world, which burn through electricity often accessed directly from public power sources. A recent report from Morgan Stanley estimates that data centers will emit three times more carbon dioxide by the end of the decade than if generative AI had not been developed.

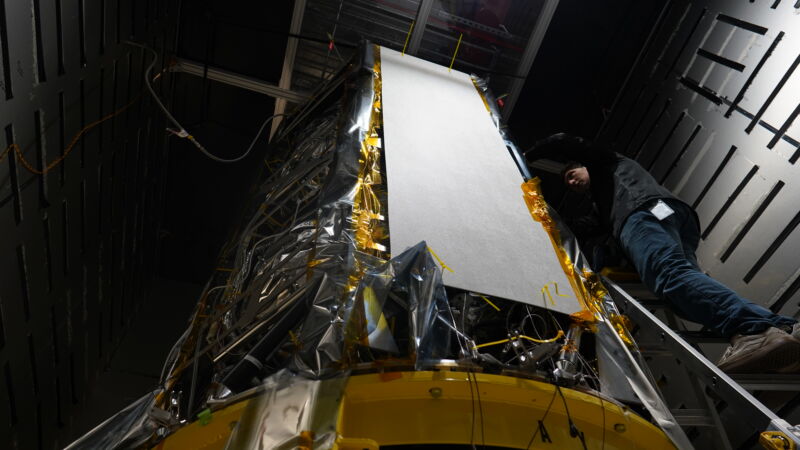

The first version of Grok launched last year, and Musk has said he hopes it will be an “anti-woke” competitor to ChatGPT. (In practice, this means it can generate controversial images that other AI models will not, such as Nazi Mickey Mouse.) In recent interviews, Musk has stressed the importance of Grok ingesting as much data as possible to catch up with his competitors. So xAI built its data center, called Colossus, in Southwest Memphis, near Boxtown, a historically Black community, to do the bulk of the training. Ebby Amir, a technologist at xAI, boasted that the new site was “the largest AI datacenter on the planet.”

Local leaders said the plant would offer “good-paying jobs” and “significant additional revenues” for the local utility company. Memphis Mayor Paul Young praised the project in a statement, saying that the new xAI training center would reside on an “ideal site, ripe for investment.”

But other local officials and community members soon became frustrated by how few details about the project were made public. The Greater Memphis Chamber and Memphis Light, Gas and Water Division (MLGW) signed a non-disclosure agreement with xAI, citing privacy of economic development. Some Memphis council members heard about the project on the news. “It’s been pretty astounding the lack of transparency and the pace at which this project has proceeded,” Amanda Garcia, a senior attorney at the Southern Environmental Law Center, says. “We learn something new every week.”

For instance, there’s a major divide between how much electricity xAI wants to use, and how much MLGW can provide. In August, the utility company said that xAI would have access to 50 megawatts of power. But xAI wants to use triple that amount—which, for comparison, is enough energy to power 80,000 households.

MLGW said in a statement to TIME that xAI is paying for the technical upgrades that will allow it to double its power usage, and that reaching the full 150 megawatts will require $1.7 million in improvements to a transmission line. “There will be no impact to the reliability of availability of power to other customers from this electric load,” the utility wrote. It added that xAI would be required to reduce its electricity consumption during times of peak demand, and that any infrastructure improvement costs would not be borne by taxpayers.

In response to complaints about the lack of communication with council members, MLGW wrote: “xAI’s request does not require approvals from the MLGW Board of Commissioners or City Council.”

But community members worry whether Memphis’s utilities can handle such a large consumer of energy. In the past, the city’s power grid has been forced into rolling blackouts by ice storms and other severe weather events.

And Garcia, at the SELC, says that while xAI waits for more power to become available, the company has turned to illegal measures to meet its demand: installing gas combustion turbines on the site and operating them without a permit. Garcia says the SELC has observed the installation of 18 such turbines, which have the capacity to emit 130 tons of harmful nitrogen oxides per year. The SELC and community groups sent a letter to the Shelby County Health Department demanding their removal, but the health department responded that the turbines were outside its authority and referred the groups to the EPA. The EPA told NPR that it was “looking into the matter.” A representative for xAI did not immediately respond to a request for comment.

Much of Memphis is already smothered by harmful pollution. The American Lung Association currently gives Shelby County, which contains Memphis, an “F” grade for its smog levels, writing, “the air you breathe may put your health at risk.” A local TV report this year named Boxtown the most polluted neighborhood in Memphis, especially during the summer.

Boxtown and its surrounding neighborhoods have historically suffered from poverty and pollution. Southwest Memphis’s cancer rate is four times the national average, according to a 2013 study, and life expectancy in at least one South Memphis neighborhood is 10 years lower than in other parts of the city, a 2020 study found. The Tennessee Valley Authority has been dumping contaminated coal ash in a nearby landfill. And a Sterilization Services of Tennessee facility was finally closed last year after decades of emitting ethylene oxide into the air, emissions that the EPA linked to increased cancer risk in South Memphis.

A representative for the Greater Memphis Chamber, which worked to bring xAI to Memphis, wrote to TIME in response to a request for comment: “We will not be participating in your narrative.”

Potential impact on water

Environmentalists are also concerned about the facility’s use of water. “Industries are attracted to us because we have some of the purest water in the world, and it is dirt cheap to access,” says Sarah Houston, the executive director of the local environmental nonprofit Protect Our Aquifer.

Data centers use water to cool their computers and stop them from overheating. So far xAI has drawn 30,000 gallons from the Memphis Sand Aquifer, the region’s drinking water supply, every day since beginning its initial operations, according to MLGW—who added that the company’s water usage would have “no impact on the availability of water to other customers.”

But Houston and other environmentalists are especially concerned because Memphis’s water infrastructure is more than a century old and has failed several winters in a row, leading to boil advisories and pleas for residents to conserve water during times of stress. “xAI is just an additional industrial user pumping this 2,000 year old pure water for a non-drinking purpose,” Houston says. “When you’re cooling supercomputers, it doesn’t seem to warrant this super pure ancient water that we will never see again.”

Memphis’s drinking water has also been threatened by contamination. In 2022, the Environmental Integrity Project and Earthjustice claimed that a now-defunct coal plant in Memphis was leaking arsenic and other dangerous chemicals into the groundwater supply, and ranked it as one of the 10 worst contaminated coal ash sites in the country. And because xAI sits close to the contaminated well in question, Houston warns that its heavy water usage could exacerbate the problem. “The more you pump, the faster contaminants get pulled down towards the water supply,” she says.

MLGW contends that xAI’s use of Memphis’s drinking water is temporary, because xAI is assisting in “the design and proposed construction” of a graywater facility that will treat wastewater so that it can be used to cool data center machines. MLGW is also trying to get Musk to provide a Tesla Megapack, a utility-scale battery, as part of the development.

Houston says that these solutions will be beneficial to the city—if they come to fruition. “We fully support xAI coming to the table and being a part of this solution,” she says. “But right now, it’s been empty promises.”

“We’re not opposed to ethical economic development and business moving into town,” says Garcia. “But we need some assurance that it’s not going to make what is already an untenable situation worse.”

Disproportionate harm

For Pearson, of Memphis Community Against Pollution, the arrival of xAI is concerning because, having grown up in Boxtown, he says he has seen how other major corporations have treated the area. Over the years, Memphis has dangled tax breaks and subsidies to persuade industrial companies to set up shop nearby. But many of those projects have not led to lasting economic development, and have seemingly contributed to an array of health problems among nearby residents.

For instance, city, county and state officials lured the Swedish home appliance manufacturer Electrolux to Memphis in 2013 with $188 million in subsidies. The company’s president told NPR that it intended to provide good jobs and stay there long-term. Six years later, the company announced it would shut down its facility to consolidate resources at another location, laying off over 500 employees, in a move that blindsided even Mayor Jim Strickland. Now, xAI has taken over that Electrolux plant, which spans 750,000 square feet.

“Companies choose Memphis because they believe it is the path of least resistance: They come here, build factories, pollute the air, and move on,” Pearson says.

Pearson says that community organizations in southwest Memphis have had no contact or dialogue with xAI about its plans in the area, and that there has been no recruiting in the community for jobs, nor any training related to workforce development. When presented with claims that xAI will economically benefit the local community, he is doubtful.

“This is the same playbook, and the same talking points passed down and passed around by these corporate colonialists,” Pearson says. “For us, it is empty, it’s callous, and it’s just disingenuous to continue to regurgitate these things without actually having plans of implementation or inclusion.”